(This piece was first published on Medium. Read here)

Ati’s autonomous technology works alongside humans, other equipment, and vehicles at customer locations, so operating reliably in dynamic environments is an important concern for us. The paper was accepted for publication at a top-ranked conference of the Association for the Advancement of Artificial Intelligence (AAAI 2021).

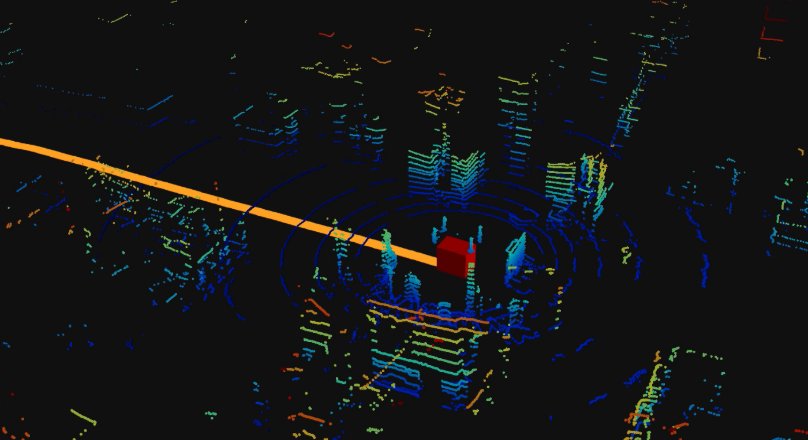

Specifically, we have shown that maps generated in dynamic environments are more corrupted than maps generated in static environments. This work attempts to solve this problem by translating a given dynamic LiDAR scan into its corresponding static LiDAR scan.

We pose the problem of Dynamic to Static Translation (DST) for LiDAR scans: we seek to learn a mapping from a dynamic scan to its corresponding static scan that minimizes the reconstruction loss. We focus on two goals: accurately reconstructing static structures such as walls and poles, and inpainting the occluded regions with a static background. Works performing DST for images and object point clouds [1–5], when adapted to our problem, produce suboptimal reconstructions for LiDAR data. LiDAR reconstruction work based on generative modeling [6] comes closest to ours. However, the reconstructions it produces are not accurate enough for SLAM.
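To make the DST objective a little more concrete, here is a minimal sketch of a reconstruction loss. It assumes each scan is stored as a range image (one row per laser ring, one column per azimuth bin, each cell holding a distance in meters). The representation, the name `reconstruction_loss`, and the choice of mean absolute error are illustrative assumptions, not necessarily what the paper uses.

```python
from typing import List

# Assumed representation: rows = laser rings, columns = azimuth bins, values = range in meters.
RangeImage = List[List[float]]


def reconstruction_loss(predicted_static: RangeImage, true_static: RangeImage) -> float:
    """Mean absolute range error between a reconstructed and a ground-truth static scan."""
    if len(predicted_static) != len(true_static):
        raise ValueError("scans must have the same number of rings")
    total, count = 0.0, 0
    for pred_row, true_row in zip(predicted_static, true_static):
        if len(pred_row) != len(true_row):
            raise ValueError("rings must have the same number of azimuth bins")
        for pred, true in zip(pred_row, true_row):
            total += abs(pred - true)
            count += 1
    if count == 0:
        raise ValueError("scans are empty")
    return total / count


# Toy example: a vehicle 4 m away occludes a wall 10 m away in two of four beams.
dynamic_scan = [[10.0, 4.0, 4.0, 10.0]]
static_scan = [[10.0, 10.0, 10.0, 10.0]]

loss_identity = reconstruction_loss(dynamic_scan, static_scan)  # a model that changes nothing
loss_perfect = reconstruction_loss(static_scan, static_scan)    # a perfect DST model
```

In this toy case the untouched dynamic scan scores 3.0 and a perfect reconstruction scores 0.0, so the gap between the two is what a DST model is trained to close.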

To address these shortcomings, we propose a Dynamic to Static LiDAR Reconstruction (DSLR) algorithm. It uses an autoencoder that is adversarially trained using a discriminator to reconstruct corresponding static frames from dynamic frames. Unlike existing DST-based methods, DSLR doesn’t require specific segmentation annotation to identify dynamic points. Additionally, if segmentation information is available, we extend DSLR to DSLR-Seg to further improve the reconstruction quality.
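One plausible way to use segmentation information, sketched below, is to keep the measured ranges for beams labeled static and substitute the reconstructed ranges only for beams labeled dynamic. This is an assumption about how such a step could work, not a description of the DSLR-Seg implementation; the function name `composite_with_segmentation` and the per-beam boolean mask format are hypothetical.

```python
from typing import List


def composite_with_segmentation(
    dynamic_scan: List[float],
    reconstructed_scan: List[float],
    is_dynamic: List[bool],
) -> List[float]:
    """Keep measured ranges for static beams; use reconstructed ranges for dynamic beams."""
    if not (len(dynamic_scan) == len(reconstructed_scan) == len(is_dynamic)):
        raise ValueError("all inputs must have one entry per beam")
    return [
        recon if dyn else measured
        for measured, recon, dyn in zip(dynamic_scan, reconstructed_scan, is_dynamic)
    ]


# Toy example: beams 1 and 2 hit a moving vehicle, beams 0 and 3 hit the wall directly.
measured = [10.0, 4.0, 4.0, 10.0]
reconstructed = [9.7, 9.9, 10.2, 10.3]  # hypothetical output of a reconstruction model
mask = [False, True, True, False]       # hypothetical segmentation: True = dynamic point

merged = composite_with_segmentation(measured, reconstructed, mask)
```

The appeal of this kind of compositing is that reconstruction error can only enter at the dynamic beams, since the static beams keep their original measurements.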

We train DSLR using a dataset that consists of corresponding dynamic and static scans of the scene. However, these pairs are hard to obtain in a simulated environment and even harder to obtain in the real world. To get around this, we propose two solutions:

1) Using Unsupervised Domain Adaptation, we propose DSLR-UDA for transfer to real-world data and experimentally show that this performs well in real-world settings.

2) A new paired-dataset generation algorithm generates appropriate dynamic and static pairs, making DSLR the first DST-based model to train on a real-world dataset.
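The pairing algorithm itself is not described in this post. As a rough illustration of what building dynamic and static pairs might involve, the sketch below matches each dynamic scan to the static scan recorded at the nearest pose and drops matches that are too far apart. The 2D pose format, the `max_distance` threshold, and the function name `pair_by_nearest_pose` are all hypothetical.

```python
import math
from typing import List, Tuple

Pose = Tuple[float, float]  # assumed (x, y) position of the sensor in meters


def pair_by_nearest_pose(
    dynamic_poses: List[Pose],
    static_poses: List[Pose],
    max_distance: float,
) -> List[Tuple[int, int]]:
    """Return (dynamic_index, static_index) pairs whose poses lie within max_distance."""
    pairs = []
    for d_idx, d_pose in enumerate(dynamic_poses):
        best_idx, best_dist = None, math.inf
        for s_idx, s_pose in enumerate(static_poses):
            dist = math.dist(d_pose, s_pose)
            if dist < best_dist:
                best_idx, best_dist = s_idx, dist
        # Discard dynamic scans with no static scan recorded close enough.
        if best_idx is not None and best_dist <= max_distance:
            pairs.append((d_idx, best_idx))
    return pairs


static_run = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
dynamic_run = [(0.1, 0.0), (1.9, 0.1), (5.0, 0.0)]

pairs = pair_by_nearest_pose(dynamic_run, static_run, max_distance=0.5)
```

Here the first two dynamic scans are paired with static scans 0 and 2, while the third is discarded because no static scan was recorded within 0.5 m of it. A real pipeline would also need to account for sensor orientation, which this sketch ignores.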

Experiments on simulated and real-world datasets show that DSLR gives at least a 4x improvement over adapted baselines on the problem of DST for LiDAR. We also show that DSLR, unlike the existing baselines, is a practically viable model with its reconstruction quality within the tolerable limits for autonomous navigation tasks like SLAM in dynamic environments.

For further details, please refer to our paper, project pages (link1 and link2), open-sourced code, and datasets.

References:

[1] Bescos et al. 2019. “Empty Cities: Image Inpainting for a Dynamic-Object-Invariant Space”. ICRA 2019.

[2] Chen et al. 2020. “Unpaired Point Cloud Completion on Real Scans using Adversarial Training”. ICLR 2020.

[3] Groueix et al. 2018. “AtlasNet: A Papier-Mâché Approach to Learning 3D Surface Generation”. CVPR 2018.

[4] Wu et al. 2020. “Multimodal Shape Completion via Conditional Generative Adversarial Networks”. ECCV 2020.

[5] Achlioptas et al. 2018. “Learning Representations and Generative Models for 3D Point Clouds”. ICML 2018.

[6] Caccia et al. 2019. “Deep Generative Modeling of LiDAR Data”. IROS 2019.