It was a bright summer morning. The crimson rays of the smiling sun were kissing the tops of lofty trees and towers. A fresh breeze and a cheerful choir of birds were flooding the atmosphere at the meditation center at Dharamshala in the Himalayas. The striking similarity between Deep Learning AI and Buddhist wisdom dawned upon me while I was attending the Buddhist meditation program at the center. For over 2,500 years, Buddhism has investigated the mind, which it holds to be non-material and different from the brain, although the two are interconnected and interdependent. Buddhist wisdom is the key to the mind. Despite extensive research by neuroscientists on the anatomy and functioning of the brain, there is very little understanding of the mind, thought, consciousness, intuition, and intelligence. Artificial Neural Networks miss out on the brain-to-mind translation. The performance of Deep Learning AI, which mimics the biological brain, can be bolstered by assimilating Buddhist learning.

Artificial Neural Networks, the foundation of what is now called Deep Learning, were conceived in the 1940s. However, it was only a few years ago that the intuitive cognitive system (learning through experience), built on continuous mathematics, came to complement classical Artificial Intelligence based on the rational (programmed) cognitive system. This empowerment has been the source of AI's spectacular achievements in the last half-decade, with wondrous near-human or superhuman feats in fields such as board and strategy games, image recognition, natural language processing, and autonomous cars. But there is some disillusionment now. Although Deep Learning employs heavy math, it is more like alchemy than chemistry: only part of its high-performing solutions can be explained rationally, and the rest is instinctual, a path diverging from human intelligence. Because of its monstrous data crunching, inscrutable black-box operation, inability to apply abstract concepts to different situations, and models that are difficult to engineer, there is a fear that Deep Learning is approaching a wall.

Deep Learning AI process stumbles on Buddhist Wisdom Tools

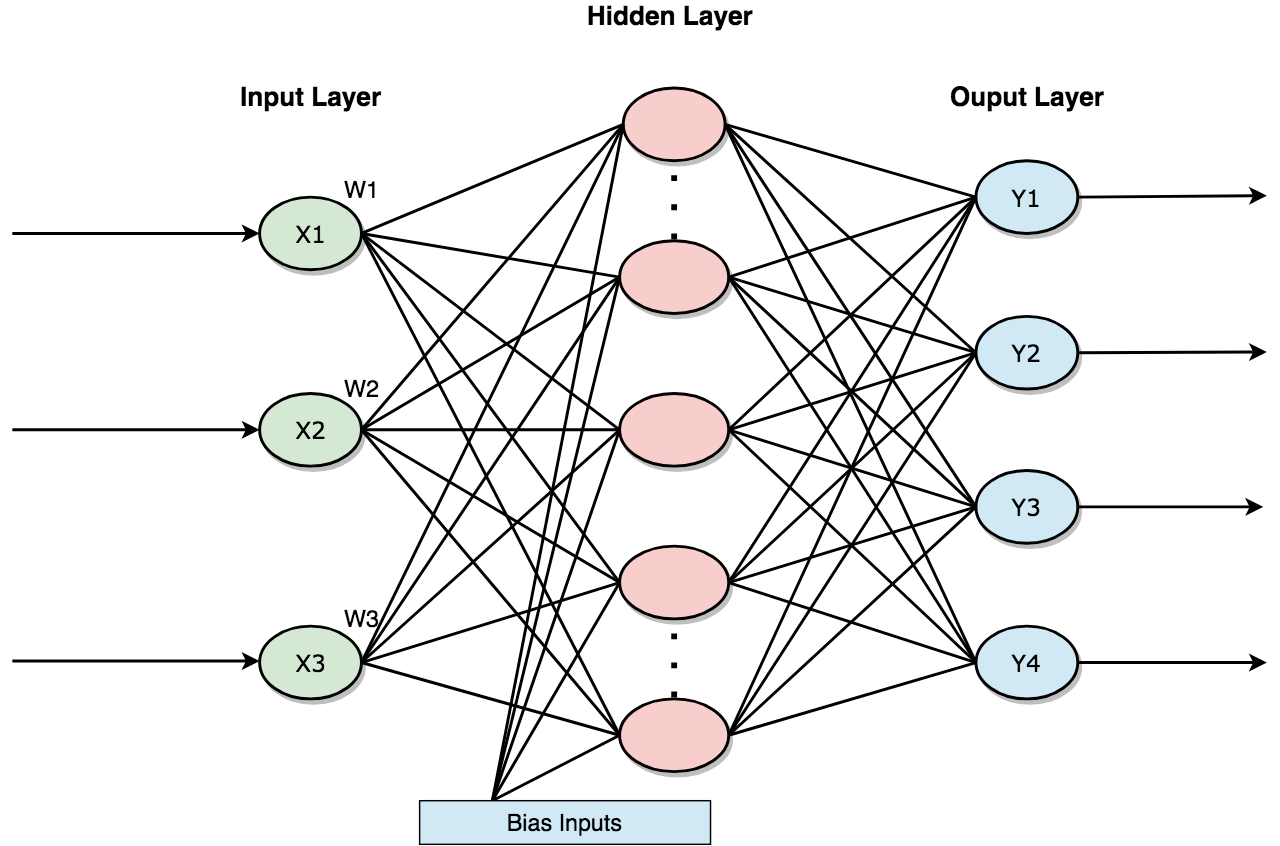

Deep Learning, a statistical process, mimics the mammalian brain. Artificial neurons (perceptrons) are simplified, homogeneous digital versions of biological neurons (Figure 1). The human brain has about 100 billion neurons and up to 1,000 trillion synapses (neural connections). Artificial neural networks differ greatly from the brain's structure: in the number of neurons and synapses; in the shape, size, and activation pattern of neurons; in innate structure; in the generation and insertion of new neurons into existing circuits; and in the selective use of particular areas.
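To make the "simplified digital neuron" concrete, here is a minimal sketch of a single artificial neuron: the inputs are multiplied by weights (standing in for synaptic strengths), a bias is added, and the sum passes through an activation function. The classic perceptron uses a hard step; this sketch assumes a sigmoid, the smooth variant common in deep networks. The function names and the input values are illustrative only.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """One artificial neuron: weighted sum of inputs plus bias, then an activation.

    The weights loosely play the role of synaptic strengths and the
    activation the role of the neuron's firing response.
    """
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Illustrative values only: z = 1.0*0.4 + 0.5*(-0.2) + 0.1 = 0.4
print(round(neuron([1.0, 0.5], [0.4, -0.2], bias=0.1), 4))  # 0.5987
```

Every neuron in a standard network is this same unit repeated, which is exactly the homogeneity the paragraph contrasts with the variety of biological neurons.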

Figure 1 — The Biological Inspiration and Artificial Neuron

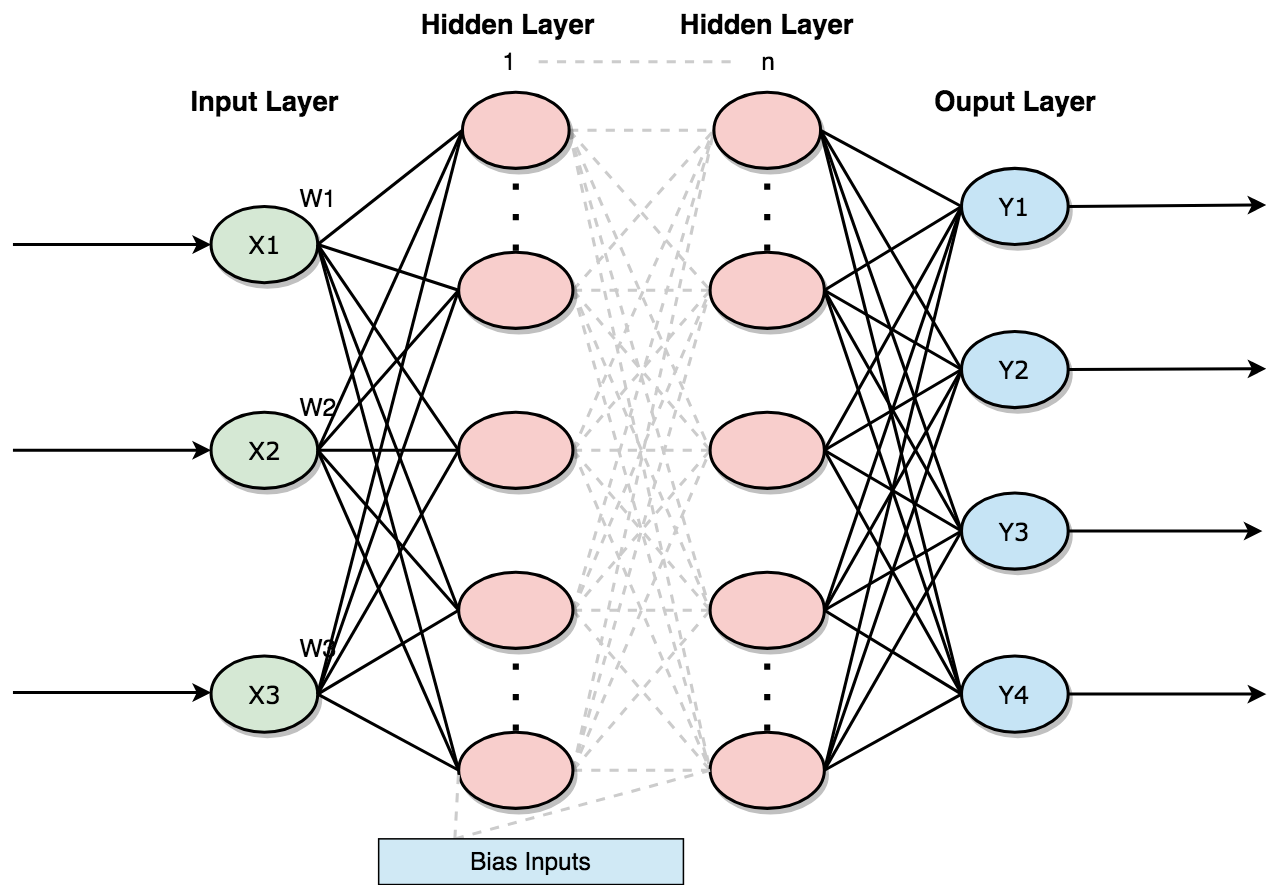

In Artificial Neural Networks, the neurons (perceptrons) are arranged in input, hidden, and output layers. Each input is multiplied by a weight, and a bias is added, as it passes to a neuron in the adjacent layer. The weighted sum passes through an activation function, and the result goes to the neurons of the next layer. The process continues until the signals reach the output layer. There, a loss function compares the actual and desired outputs, and the back-propagation algorithm propagates the difference back toward the input layer so that the weights and biases can be optimized. This iterative process continues until the output is close to the desired one. The structures of 'Shallow' and 'Deep' neural networks are illustrated in Figure 2. Once the weights and biases are optimized, the Artificial Neural Network model produces more accurate outputs, which are checked across the training, validation, and testing stages. Subsequent tasks can benefit from transfer learning. Deep Learning algorithms typically require millions of training examples. Even after ingesting vast amounts of quality data, Deep Learning AI still falls far short of human capabilities of reasoning, understanding, and common sense.
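The cycle described above (forward pass, loss, back-propagation, and iterative adjustment of weights and biases) can be sketched as a tiny network in plain Python. This is a minimal illustration, not a production method: it assumes one hidden layer of sigmoid neurons, a squared-error loss, per-example gradient descent, and a toy OR dataset. The names `train` and `OR_DATA` and all hyperparameters are hypothetical choices for the example.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(data, n_hidden=3, lr=0.5, epochs=2000, seed=0):
    """Train a 1-hidden-layer network with back-propagation; return (forward, loss history)."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # Hidden layer: one weight per input for each hidden neuron, plus a bias each.
    w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    # Output layer: one weight per hidden neuron, plus a single bias.
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = 0.0

    def forward(x):
        h = [sigmoid(sum(w * xi for w, xi in zip(w1[j], x)) + b1[j]) for j in range(n_hidden)]
        y = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
        return h, y

    def mean_loss():
        # Mean squared difference between actual and desired outputs.
        return sum((forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    history = [mean_loss()]
    for _ in range(epochs):
        for x, target in data:
            h, y = forward(x)
            # Error signal at the output neuron (squared error through a sigmoid).
            delta_out = 2.0 * (y - target) * y * (1.0 - y)
            # Back-propagate to each hidden neuron, using w2 before it is updated.
            delta_hidden = [delta_out * w2[j] * h[j] * (1.0 - h[j]) for j in range(n_hidden)]
            # Gradient-descent step on every weight and bias.
            for j in range(n_hidden):
                w2[j] -= lr * delta_out * h[j]
                for i in range(n_in):
                    w1[j][i] -= lr * delta_hidden[j] * x[i]
                b1[j] -= lr * delta_hidden[j]
            b2 -= lr * delta_out
        history.append(mean_loss())
    return forward, history


OR_DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
predict, history = train(OR_DATA)
print(f"loss before: {history[0]:.3f}, after: {history[-1]:.3f}")
for x, t in OR_DATA:
    print(x, "desired:", t, "actual:", round(predict(x)[1], 2))
```

Even this toy problem needs thousands of weight updates to settle, a small-scale echo of the data and compute appetite the paragraph describes.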

Figure 2 — Shallow & Deep Neural Networks

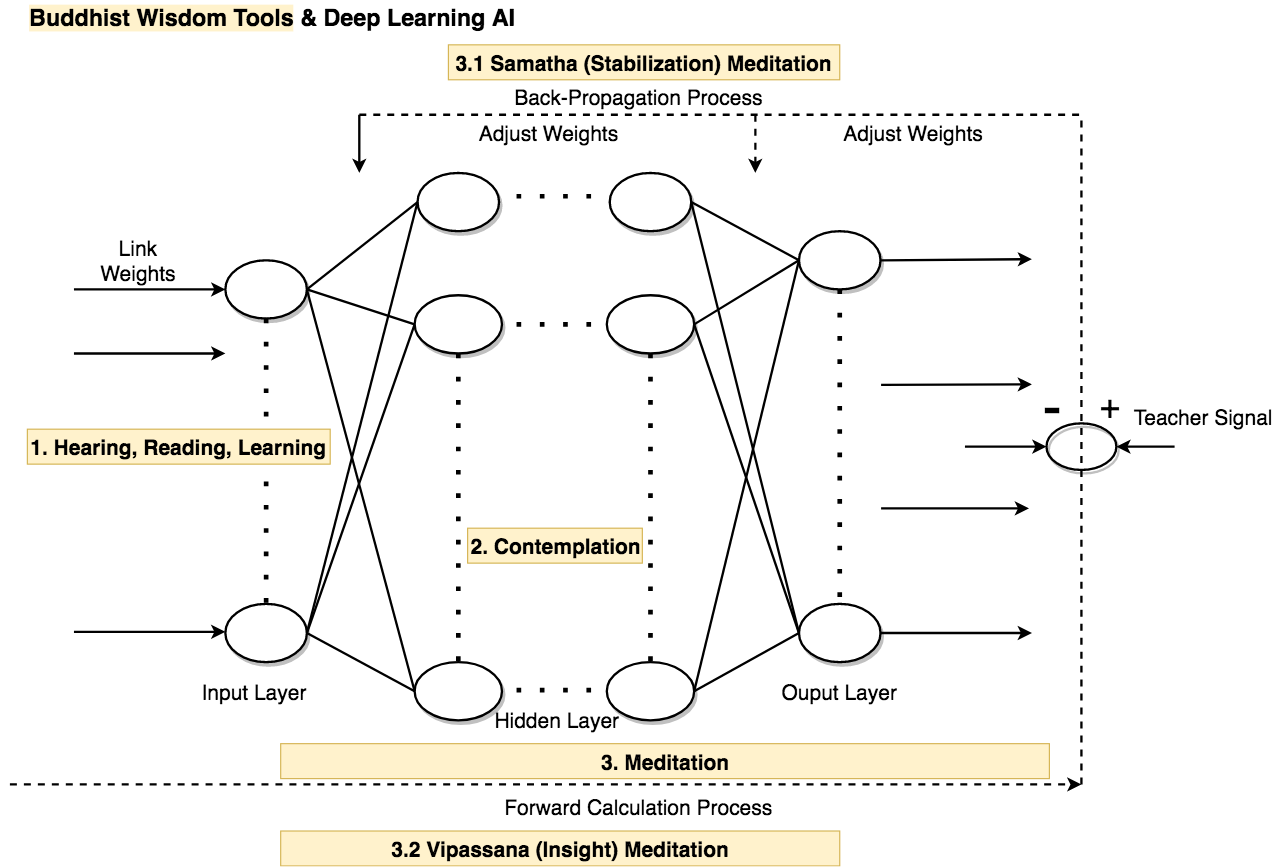

Buddhist wisdom (prajna) comprises three tools, namely hearing (sutras), contemplation (Cinta), and meditation (Bhavana). For intellectual understanding, we have to hear, learn, and study (sutras). We can internalize this knowledge through the wisdom of contemplation and reflection (Cinta). Based on these two pearls of wisdom, we begin to practice meditation (Bhavana), further awakening our inner understanding. During stabilization (Samatha) meditation and insight (Vipassana) meditation, the brain is the object of a cognitive process that is turned inward rather than outward. Value is assigned to certain mental states to increase their prevalence, and synaptic connectivity changes accordingly. This is somewhat similar to what happens when we learn through interactions with the outer world. The three Buddhist wisdom tools are symbolically represented in Figure 3.

Figure 3 — Hearing, Contemplation, and Meditation for Brain-Mind Translation

Deep Learning seems to have taken a leaf out of Buddhist wisdom. The steps of Deep Learning, namely input, assignment of weights and biases, optimization, and output, are similar to the Buddhist wisdom tools of hearing, contemplation, stabilization (Samatha) meditation, and insight (Vipassana) meditation. Stabilization meditation corresponds to the loss-function and back-propagation adjustments that continue until optimization, whereas insight meditation is like obtaining outputs by giving inputs to a trained model. This step-by-step correspondence is illustrated in Figure 4 below.

Figure 4 — Deep Learning Steps follow Buddhist Wisdom Tools (Image created by Saurabh Saxena)

Buddhist learning to bolster Deep Learning

Deep Learning AI's dependence on massive data and complex computations is the source of its woes, such as its 'black box' nature (not knowing which inputs or links are important), its inability to use abstract concepts across different applications (due to the maze of data), and models that are difficult to engineer (an unwieldy number of layers and of neurons in each layer, and difficulty in selecting activation and loss functions, especially for complex problems). By integrating Buddhist learning, AI will use less data, minimize heavy mathematical operations, and combine algorithms with human experience and intuition to solve problems facing humanity, such as climate change and incurable diseases. The following observation, named after AI researcher Hans Moravec, is relevant in this context.

Moravec's paradox: contrary to traditional assumptions, high-level reasoning requires very little computation, but low-level sensorimotor skills require enormous computational resources.

The assimilation of Buddhist learnings into AI is a complex subject. It will require a coordinated effort by Buddhist scholars, neuroscientists, and AI specialists to identify how Buddhist learnings can benefit the current approach to AI and to devise methodologies for integrating them. Some representative examples are listed below.

- Incorporating Innate Brain Structure: Even a child unfamiliar with Buddhist practices is capable of reasoning about and understanding scriptures from small amounts of data. This ability rests on an innate brain structure present at birth. AI has to acquire a semblance of it to be closer to nature rather than depending on nurture. Brain circuitry uses a wide variety of neurons to shape neural transmission and information processing. There are different types of neurons, such as motor neurons, sensory neurons, and interneurons, with synaptic connections to neighboring, local, and long-distance neurons. To capture later-stage cognitive steps, beyond the early processing stages of perception such as vision and hearing, some heterogeneity in neuron types and connectivity has to be introduced into the over-simplified, uniform neurons of artificial neural networks.

- Precedence of Outer Layers using Sparse Topology: Neuroscientists have studied the brain structure of long-time practitioners of Buddhist wisdom tools. The cortex, the outermost layer of the brain's biological networks, has neurons with both local and long-range connections. It has been observed that monks develop cortical thickening, associated with increased neuron density and number of synapses, in brain regions linked to attention and sensory processing. In AI network topology, this would translate into giving precedence to the outer layers over the hidden layers. The standard architecture of Artificial Neural Networks with fully connected layers should be modified: some synapses between feed-forward layers would be removed (a sparse topology, akin to dropout), and synapses that skip several layers could be created. A fully generic network topology, which allows any node to connect to any other, would help the outer layers take precedence over the hidden layers.

- Using Part of the Deep Neural Architecture: Buddhist meditators develop significant left-sided brain activation. This suggests that a particular area, rather than a wider region or the whole brain, is used to solve most problems. As a departure from the traditional feed-forward topology, Artificial Neural Networks could use generic interconnected networks that allow a particular area of the network to be used. Processing units for such sparse deep neural topologies are under development.
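The three ideas in the list above (heterogeneous neurons, sparse synapses that may skip layers, and using only part of a network) can be illustrated with a generic directed acyclic graph of neurons in place of fully connected layers. This is a hypothetical sketch under stated assumptions, not a published architecture or the author's method: the class names, the node names, the activation choices, and the `active` subset are all invented for the example, and training is not shown.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List, Optional, Set, Tuple

# Hypothetical menu of activations, so that neurons need not all be identical.
ACTIVATIONS: Dict[str, Callable[[float], float]] = {
    "identity": lambda z: z,
    "relu": lambda z: max(0.0, z),
    "tanh": math.tanh,
    "sigmoid": lambda z: 1.0 / (1.0 + math.exp(-z)),
}


@dataclass
class Node:
    activation: str = "identity"  # each node may use a different function
    bias: float = 0.0
    # Sparse incoming synapses as (source name, weight). A source may be any
    # earlier node or input, so a synapse can jump over several "layers".
    inputs: List[Tuple[str, float]] = field(default_factory=list)


class SparseNetwork:
    """A generic DAG of neurons; each node must be added after all of its sources."""

    def __init__(self, input_names: Iterable[str]):
        self.input_names = list(input_names)
        self.nodes: Dict[str, Node] = {}  # insertion order is a topological order

    def add(self, name: str, node: Node) -> None:
        known = set(self.input_names) | set(self.nodes)
        for src, _ in node.inputs:
            if src not in known:
                raise ValueError(f"{name} depends on unknown or later node {src}")
        self.nodes[name] = node

    def forward(self, x: Dict[str, float], active: Optional[Set[str]] = None) -> Dict[str, float]:
        """Evaluate every node; if `active` is given, nodes outside it output 0."""
        values = dict(x)
        for name, node in self.nodes.items():
            if active is not None and name not in active:
                values[name] = 0.0  # switched-off region contributes nothing
                continue
            z = node.bias + sum(w * values[src] for src, w in node.inputs)
            values[name] = ACTIVATIONS[node.activation](z)
        return values


net = SparseNetwork(["x1", "x2"])
net.add("h1", Node("relu", 0.0, [("x1", 1.0)]))                # sees only x1
net.add("h2", Node("tanh", 0.0, [("x2", 0.5), ("h1", 1.0)]))
# "out" has a skip synapse straight from x2, bypassing the hidden nodes.
net.add("out", Node("identity", 0.0, [("h2", 2.0), ("x2", 1.0)]))

full = net.forward({"x1": 1.0, "x2": 2.0})
partial = net.forward({"x1": 1.0, "x2": 2.0}, active={"h1", "out"})
print(round(full["out"], 3), partial["out"])  # 3.928 2.0
```

Storing incoming synapses per node, rather than dense weight matrices per layer, is what lets any node connect to any earlier node; dense layers would be faster on current hardware, which is why the text points to specialized processors for such topologies.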

Integrating the benefits of Buddhist wisdom tools into Deep Learning AI is feasible with processors that are available or, in some cases, under development. For example, as a proof of concept, 'Precedence of Outer Layers using Deep Neural Sparse Topology' can be implemented with current technology to solve a simple real-world problem. The solutions obtained from AI integrating Buddhist wisdom tools can then be compared with results from traditional Deep Learning AI and with actual measurements. Such a transformed, intuitive reasoning machine built on Buddhist learning will be scalable to bigger problems, such as climate change, using minimal data, endorsing Moravec's observation that greater computational capability does not lead to more intelligent systems.

Conclusion

Deep Learning AI in its present form ingests and analyzes a huge amount of data in its hidden layers to train a model. As the complexity of models increases, it becomes difficult to interpret how they work, and it is not practical to solve complex problems using opaque models. Deep Learning AI resembles Buddhist learning and can empower itself by assimilating Buddhist wisdom tools. An architecture, key algorithms, optimization, and model analysis inspired by Buddhist wisdom will require less data and build explainable, holistic technical solutions to big problems like disease and climate change. This less artificial and more intelligent AI will allay fears of another AI winter and of AI posing an existential threat to humans.