
“Don’t ask what computers can do, ask what they should do.”

That is the title of the chapter on AI and ethics in a book I coauthored with Carol Ann Browne in 2019. At the time, we wrote that “this may be one of the defining questions of our generation.” Four years later, the question has seized center stage not just in the world’s capitals, but around many dinner tables.

As people use or hear about the power of OpenAI’s GPT-4 foundation model, they are often surprised or even astounded. Many are enthused or even excited. Some are concerned or even frightened. What has become clear to almost everyone is something we noted four years ago—we are the first generation in the history of humanity to create machines that can make decisions that previously could only be made by people.

Countries around the world are asking common questions.

- How can we use this new technology to solve our problems?

- How do we avoid or manage new problems it might create?

- How do we control technology that is so powerful?

These questions call not only for broad and thoughtful conversation, but decisive and effective action.

Earlier this year, the global population exceeded eight billion people. Today, one out of every six people on Earth lives in India. India is experiencing a significant technological transformation that presents a tremendous opportunity to leverage innovation for economic growth. This paper offers some of our ideas and suggestions as a company, placed in the Indian context.

To develop AI solutions that serve people globally and warrant their trust, we’ve defined, published, and implemented ethical principles to guide our work. And we are continually improving engineering and governance systems to put these principles into practice. Today, we have nearly 350 people working on responsible AI at Microsoft, helping us implement best practices for building safe, secure, and transparent AI systems designed to benefit society.

New opportunities to improve the human condition

The resulting advances in our approach to responsible AI have given us the capability and confidence to see ever-expanding ways for AI to improve people’s lives. Acting as a copilot in people’s lives, foundation models like GPT-4 are turning search into a more powerful tool for research and improving productivity for people at work. And for any parent who has struggled to remember how to help their 13-year-old child through an algebra homework assignment, AI-based assistance is a helpful tutor.

While this technology will benefit us in everyday tasks by helping us do things faster, easier, and better, AI’s real potential is in its promise to unlock some of the world’s most elusive problems. We’ve seen AI help save individuals’ eyesight, make progress on new cures for cancer, generate new insights about proteins, and provide predictions to protect people from hazardous weather. Other innovations are fending off cyberattacks and helping to protect fundamental human rights, even in nations afflicted by foreign invasion or civil war. We are optimistic about the innovative solutions from India that are included in Part 3 of this report. These solutions demonstrate how India’s creativity and innovation can address some of the most pressing challenges in various domains such as education, health, and environment.

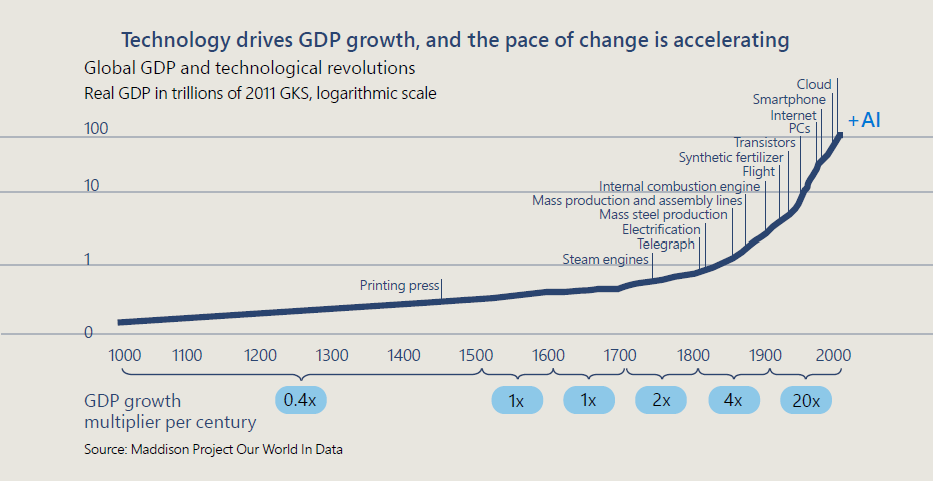

In so many ways, AI offers perhaps even more potential for the good of humanity than any invention that has preceded it. Since the invention of the printing press with movable type in the 1400s, human prosperity has been growing at an accelerating rate. Inventions like the steam engine, electricity, the automobile, the airplane, computing, and the internet have provided many of the building blocks for modern civilization. And like the printing press itself, AI offers a new tool to genuinely help advance human learning and thought.

Guardrails for the future

Another conclusion is equally important: it’s not enough to focus only on the many opportunities to use AI to improve people’s lives. This is perhaps one of the most important lessons from the role of social media. Little more than a decade ago, technologists and political commentators alike gushed about the role of social media in spreading democracy during the Arab Spring. Yet five years after that, we learned that social media, like so many other technologies before it, would become both a weapon and a tool—in this case aimed at democracy itself.

Today, we are 10 years older and wiser, and we need to put that wisdom to work. We need to think early on and in a clear-eyed way about the problems that could lie ahead.

We also believe that it is just as important to ensure proper control over AI as it is to pursue its benefits. We are committed and determined as a company to develop and deploy AI in a safe and responsible way. The guardrails needed for AI require a broadly shared sense of responsibility and should not be left to technology companies alone. Our AI products and governance processes must be informed by diverse multistakeholder perspectives that help us responsibly develop and deploy our AI technologies in cultural and socioeconomic contexts that may be different from our own.

When we at Microsoft adopted our six ethical principles for AI in 2018, we noted that one principle was the bedrock for everything else—accountability. This is the fundamental need: to ensure that machines remain subject to effective oversight by people and the people who design and operate machines remain accountable to everyone else. In short, we must always ensure that AI remains under human control. This must be a first-order priority for technology companies and governments alike.

This connects directly with another essential concept. In a democratic society, one of our foundational principles is that no person is above the law. No government is above the law. No company is above the law, and no product or technology should be above the law. This leads to a critical conclusion: people who design and operate AI systems cannot be accountable unless their decisions and actions are subject to the rule of law.

In many ways, this is at the heart of the unfolding AI policy and regulatory debate. How do governments best ensure that AI is subject to the rule of law? In short, what form should new law, regulation, and policy take?

A five-point blueprint for the public governance of AI

Building on what we have learned from our responsible AI program at Microsoft, we released a blueprint in May that detailed our five-point approach to help advance AI governance. In this version, we present those policy ideas and suggestions in the context of India. We do so with the humble recognition that every part of this blueprint will benefit from broader discussion and require deeper development. But we hope this can contribute constructively to the work ahead. We offer specific steps to:

- Implement and build upon new government-led AI safety frameworks.

- Require effective safety brakes for AI systems that control critical infrastructure.

- Develop a broader legal and regulatory framework based on the technology architecture for AI.

- Promote transparency and ensure academic and public access to AI.

- Pursue new public-private partnerships to use AI as an effective tool to address the inevitable societal challenges that come with new technology.

More broadly, to make the many different aspects of AI governance work on an international level, we will need a multilateral framework that connects various national rules and ensures that an AI system certified as safe in one jurisdiction can also qualify as safe in another. There are many effective precedents for this, such as the common safety standards set by the International Civil Aviation Organization, which mean an airplane does not need to be refitted midflight from Delhi to New York.

As the current holder of the G20 Presidency and Chair of the Global Partnership on AI, India is well positioned to help advance a global discussion on AI issues. Many countries will look to India’s leadership and example on AI regulation. India’s strategic position in the Quad and efforts to advance the Indo-Pacific Economic Framework present further opportunities to build awareness amongst major economies and drive support for responsible AI development and deployment within the Global South.

Working towards an internationally interoperable approach to responsible AI is critical to maximizing the benefits of AI globally. Recognizing that AI governance is a journey, not a destination, we look forward to supporting these efforts in the months and years to come.

Governing AI within Microsoft

Ultimately, every organization that creates or uses advanced AI systems will need to develop and implement its own governance systems. Part 2 of this paper describes the AI governance system within Microsoft—where we began, where we are today, and how we are moving into the future.

As this section recognizes, the development of a new governance system for new technology is a journey in and of itself. A decade ago, this field barely existed. Today Microsoft has almost 350 employees specializing in it, and we are investing in our next fiscal year to grow this further.

As described in this section, over the past six years we have built out a more comprehensive AI governance structure and system across Microsoft. We didn’t start from scratch, borrowing instead from best practices for the protection of cybersecurity, privacy, and digital safety. This is all part of the company’s comprehensive Enterprise Risk Management (ERM) system, which has become a critical part of the management of corporations and many other organizations in the world today.

When it comes to AI, we first developed ethical principles and then had to translate these into more specific corporate policies. We’re now on version 2 of the corporate standard that embodies these principles and defines more precise practices for our engineering teams to follow. We’ve implemented the standard through training, tooling, and testing systems that continue to mature rapidly. This is supported by additional governance processes that include monitoring, auditing, and compliance measures.

As with everything in life, one learns from experience. When it comes to AI governance, some of our most important learning has come from the detailed work required to review specific, sensitive AI use cases. In 2019, we founded a sensitive use review program to subject our most sensitive and novel AI use cases to rigorous, specialized review that results in tailored guidance. Since that time, we have completed roughly 600 sensitive use case reviews. The pace of this activity has quickened to match the pace of AI advances, with almost 150 such reviews taking place in the last 11 months.

All of this builds on the work we have done and will continue to do to advance responsible AI through company culture. That means hiring new and diverse talent to grow our responsible AI ecosystem and investing in the talent we already have at Microsoft to develop skills and empower them to think broadly about the potential impact of AI systems on individuals and society. It also means that much more than in the past, the frontier of technology requires a multidisciplinary approach that combines great engineers with talented professionals from across the liberal arts.

At Microsoft, we look to engage stakeholders from around the world as we develop our responsible AI work to ensure it is informed by the best thinking from people working on these issues globally and to advance a representative discussion on AI governance. As one example, earlier in 2023, Microsoft’s Office of Responsible AI partnered with the Stimson Center’s Strategic Foresight Hub to launch our Global Perspectives Responsible AI Fellowship. The purpose of the fellowship is to convene diverse stakeholders from civil society, academia, and the private sector in Global South countries for substantive discussions on AI, its impact on society, and ways that we can all better incorporate the nuanced social, economic, and environmental contexts in which these systems are deployed.

A comprehensive global search led us to select fellows from Africa (Nigeria, Egypt, and Kenya), Latin America (Mexico, Chile, Dominican Republic, and Peru), Asia (Indonesia, Sri Lanka, India, Kyrgyzstan, and Tajikistan), and Eastern Europe (Turkey). Later this year, we will share outputs of our conversations and video contributions to shine light on the issues at hand, present proposals to harness the benefits of AI applications, and share key insights about the responsible development and use of AI in the Global South.

All this is offered in this paper in the spirit that we’re on a collective journey to forge a responsible future for artificial intelligence. We can all learn from each other. And no matter how good we may think something is today, we will all need to keep getting better.

As technology change accelerates, the work to govern AI responsibly must keep pace with it. With the right commitments and investments that keep people at the center of AI systems globally, we believe it can.

Sources of Article

https://blogs.microsoft.com/on-the-issues/2023/08/23/indias-ai-opportunity/