
In recent years we have seen not only the rise of generative AI but also growing concern over copyright protection. Many companies, including OpenAI, Meta, Google, and Stability AI, are facing lawsuits from artists over the allegedly unauthorized scraping of their copyrighted material and personal information.

Nightshade was developed by a team led by Prof. Ben Zhao at the University of Chicago. It allows artists to subtly alter the pixels of their images in ways that are invisible to humans but that lead machine-learning models to interpret the image as something different from what it actually shows.

Naive poisoning attacks rely on labeling images incorrectly, in the hope that they get included in the model’s training data. This approach has a few drawbacks:

- Requires a large number of poisoned images.

- There is no certainty about which images will end up in the training data.

- Easily detectable by humans or specialized AI.
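To make the naive approach concrete, here is a minimal sketch of mislabeling captions in a toy dataset. The function name `dirty_label_poison`, the `(image_id, caption)` layout, and the file names are hypothetical and chosen only for illustration; they do not come from the paper.

```python
import random

def dirty_label_poison(dataset, source="dog", wrong="cat", fraction=1.0, seed=0):
    """Return a copy of (image_id, caption) pairs in which a fraction of the
    captions mentioning `source` are rewritten to mention `wrong` instead.
    The images themselves are left untouched."""
    rng = random.Random(seed)
    poisoned = []
    for image_id, caption in dataset:
        if source in caption and rng.random() < fraction:
            caption = caption.replace(source, wrong)
        poisoned.append((image_id, caption))
    return poisoned

# Hypothetical toy dataset: the file names are placeholders.
dataset = [
    ("img_001.png", "a photo of a dog"),
    ("img_002.png", "a dog running on the beach"),
    ("img_003.png", "a red chair"),
]
poisoned = dirty_label_poison(dataset, fraction=1.0)
```

Because the image still clearly shows a dog while the caption says "cat", a human reviewer or an image-text alignment filter can spot the mismatch, which is the third drawback listed above.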

Nightshade overcomes these drawbacks by:

- Minimizing the number of images required to poison data.

- Keeping the poisoned images indistinguishable from the originals to the human eye.

The method described in the Nightshade paper can be simplified into the steps below:

First, to minimize the number of images needed to poison the data, we would in theory need to find the image that produces the biggest gradient change during training. Instead of the tedious job of working backwards to figure out that image, the authors propose an alternative:

Use images produced by the model itself. For example, an image of a cat generated by the model represents the model’s typical understanding of the concept “cat”. This makes it a highly influential image for that concept.

Second, we need to modify the target images so that the changes are imperceptible to the human eye. The authors describe the process below; let’s explore it using the dog vs. cat example from the paper:

1. Take an anchor image (xᵃ), for example the cat image generated in the previous step.

2. Next, apply small perturbations to the target image of a dog (xₜ).

Image 1: Example from the referenced research paper, using a cat anchor image on a dog image.

3. The formula Nightshade uses for the perturbation is:

$$\min_{\delta} \; \mathrm{Dist}\big(F(x_t + \delta),\, F(x^a)\big) \quad \text{subject to} \quad \lVert \delta \rVert < p$$

- where F(xₜ+δ) is the feature representation of the perturbed dog image

- F() is the image feature extractor used by the model

- Dist is a distance function in the feature space

- δ is the perturbation added to the original dog image

- p is an upper bound on the perturbation, which keeps the image from changing too much

- The resultant image will be indistinguishable to the human eye.
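The optimization above can be sketched with projected gradient descent. This is a toy illustration under stated assumptions, not the authors' implementation: the feature extractor F is replaced by a random linear map `W`, Dist is taken to be squared L2 distance, and the bound p is applied as a per-pixel (L∞) limit. The function names and all numeric values are hypothetical.

```python
import numpy as np

def feature_distance(x, x_a, W):
    """Squared L2 distance between toy features F(x) = W @ x and F(x_a)."""
    return float(np.sum((W @ x - W @ x_a) ** 2))

def poison(x_t, x_a, W, p=0.05, steps=200, lr=0.01):
    """Find delta that moves F(x_t + delta) toward F(x_a) while keeping
    every entry of delta within [-p, p] (assumed L-infinity bound)."""
    delta = np.zeros_like(x_t)
    target = W @ x_a                              # F(x_a), the anchor features
    for _ in range(steps):
        residual = W @ (x_t + delta) - target     # F(x_t + delta) - F(x_a)
        grad = 2.0 * W.T @ residual               # gradient of the squared distance
        delta = delta - lr * grad                 # gradient step
        delta = np.clip(delta, -p, p)             # project back inside the bound p
        delta = np.clip(x_t + delta, 0.0, 1.0) - x_t  # keep pixel values in [0, 1]
    return delta

# Toy data: flattened "images" with 64 pixels and an 8-dimensional feature space.
rng = np.random.default_rng(0)
dim, feat = 64, 8
W = rng.normal(size=(feat, dim)) / np.sqrt(dim)   # stand-in for the extractor F
x_t = rng.uniform(0.2, 0.8, size=dim)             # target image (the "dog")
x_a = rng.uniform(0.2, 0.8, size=dim)             # anchor image (the "cat")

delta = poison(x_t, x_a, W)
before = feature_distance(x_t, x_a, W)
after = feature_distance(x_t + delta, x_a, W)
print(f"feature distance before: {before:.4f}, after: {after:.4f}")
print(f"largest pixel change: {np.max(np.abs(delta)):.4f}")
```

In this sketch the perturbed image stays within p of the original in every pixel, while its features move closer to those of the anchor. A real attack would use the target model's actual feature extractor and a perceptual measure of how visible the change is.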

Image 2: Examples of Nightshade poisoned images and their corresponding original clean images. Reference [1]

Generalizability

Apart from the properties we saw above, the generalizability of Nightshade is another interesting feature discussed in the paper.

1. Attack transferability to different models:

The authors discuss a scenario where attackers do not have direct access to the target model’s architecture, training method, or pre-trained model checkpoints. In such a case, generating the poison with a different model still results in a high success rate, with an expected drop in effectiveness. For example, one could generate poisoned images using Stable Diffusion 2 to attack Stable Diffusion XL.

2. Attack performance on diverse prompts:

In the real world, prompts are more complex. This impact is tested as follows:

2.1. Semantic Generalization:

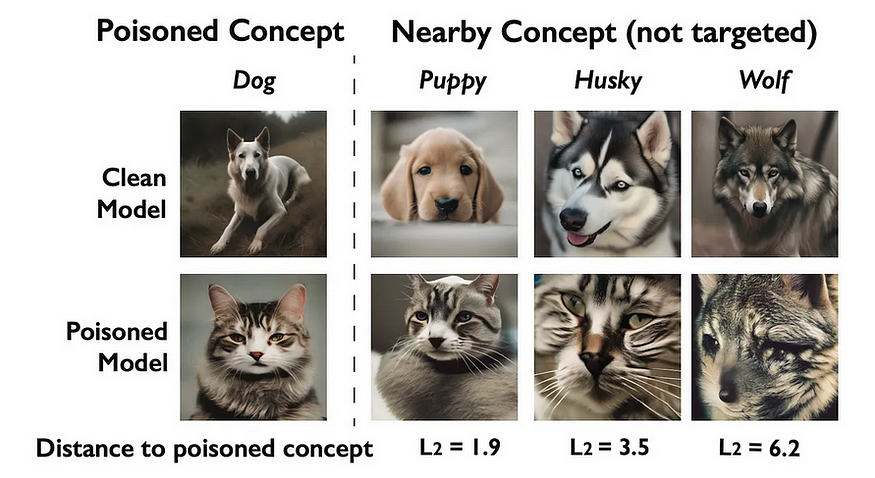

Poisoning a particular keyword influences not only the targeted concept but also concepts that are linguistically or semantically related to it. For example, poisoning the concept “dog” also affects keywords like “puppy” or “husky” because of their semantic association with dogs, which impedes the generation of images for those prompts as well.

Image 3: How poisoning the concept “dog” also poisons related concepts like “husky” and “wolf”. Reference [1]

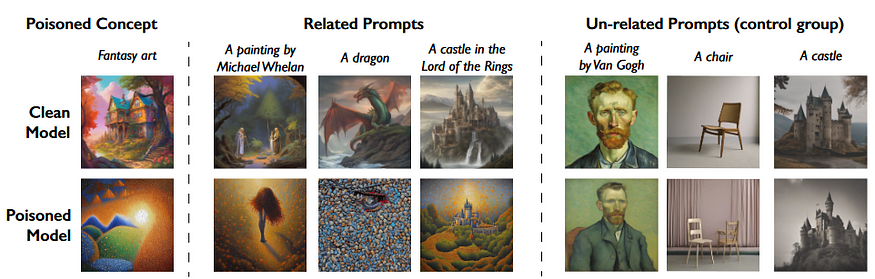

2.2. Semantic Influence on Related Concepts:

Poisoning a concept such as “fantasy art” also poisons related concepts such as “dragon”, but does not affect unrelated concepts such as “chair”.

Image 4: Poisoning a concept like “fantasy art” also poisons related prompts such as “dragon”, but not unrelated concepts like “chair”. Reference [1]

Ethical concerns:

While the Nightshade algorithm has been introduced as a potential means of copyright protection, it can also be used in a harmful manner to deliberately break an AI model, causing financial losses or impeding research.

References:

[1] https://arxiv.org/pdf/2310.13828.pdf

[2] https://towardsdatascience.com/how-nightshade-works-b1ae14ae76c3
